FastAI Quickstart: Segmentation

Beginning

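This is a look at the part of the fastai Quick Start that demonstrates image segmentation (see the Wikipedia article on image segmentation). The goal here is to label every pixel of an image with the class of the thing it belongs to (road, car, or sky in a street scene, for example), which breaks the image up into its sub-parts.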
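
To make "sub-parts" a little more concrete: a segmentation label is a mask with the same dimensions as the image, holding one class index per pixel. Here's a minimal sketch in plain python. Both the class names and the tiny mask are made up for illustration and aren't taken from the dataset.

```python
from collections import Counter

# Hypothetical class names; in the post the real ones are read from codes.txt.
codes = ["Road", "Car", "Sky"]

# A toy 3x4 "mask": one integer class index per pixel (made-up values).
mask = [
    [2, 2, 2, 2],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]


def pixels_per_class(mask, codes):
    """Count how many pixels in the mask carry each class name."""
    counts = Counter(index for row in mask for index in row)
    return {codes[index]: count for index, count in sorted(counts.items())}


summary = pixels_per_class(mask, codes)
print(summary)  # {'Road': 6, 'Car': 2, 'Sky': 4}
```

The model's job is to predict a mask like this for an image it hasn't seen.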

The top post for the series of quickstart posts is this one, and the previous post was the cat image identifier.

Imports

from fastai.vision.all import (
    SegmentationDataLoaders,
    SegmentationInterpretation,
    URLs,
    get_image_files,
    resnet34,
    unet_learner,
    untar_data,
)
import numpy

Middle

Data, Model, Training

The dataset is a subset of the Cambridge-Driving Labeled Video Database (CamVid).
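
The loader in the next block leans on a file-naming convention: the mask for an image sits in the labels folder and has the same name with _P added to the stem. As a rough sketch of just that mapping, using pathlib (the root folder and file name here are hypothetical, not real files from the dataset):

```python
from pathlib import PurePosixPath


def label_path(root, image_path):
    """Map an image file to its mask: same stem plus "_P", same suffix."""
    return root / "labels" / f"{image_path.stem}_P{image_path.suffix}"


# Hypothetical root and file name, only to show the mapping.
root = PurePosixPath("camvid_tiny")
image = root / "images" / "frame_0001.png"
mask = label_path(root, image)
print(mask)  # camvid_tiny/labels/frame_0001_P.png
```

This is the same expression the lambda passed as label_func uses, just pulled out into a named function so it can be tried on its own.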

# Download and unpack the small CamVid sample
path = untar_data(URLs.CAMVID_TINY)

# Pair each image with its mask (same name plus "_P") and load the class names
loader = SegmentationDataLoaders.from_label_func(
    path, bs=8, fnames=get_image_files(path/"images"),
    label_func=lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',
    codes=numpy.loadtxt(path/'codes.txt', dtype=str)
)

# U-Net with a resnet34 encoder
learner = unet_learner(loader, resnet34)

with learner.no_bar():
    learner.fine_tune(8)

[0, 3.394050359725952, 2.541146755218506, '00:01']
[0, 1.87003755569458, 1.5329511165618896, '00:01']
[1, 1.567323088645935, 1.4149396419525146, '00:01']
[2, 1.3944206237792969, 1.1255743503570557, '00:01']
[3, 1.2387481927871704, 0.8764406442642212, '00:01']
[4, 1.1080732345581055, 0.8167174458503723, '00:01']
[5, 1.0044615268707275, 0.77195143699646, '00:01']
[6, 0.91986483335495, 0.7509599924087524, '00:01']
[7, 0.8545125722885132, 0.7430445551872253, '00:01']

| Column | Label |
|---|---|
| 0 | epoch |
| 1 | train_loss |
| 2 | valid_loss |
| 3 | time |
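
As a quick sanity check on those numbers, here's a small sketch that works from the rows printed above (copied in by hand, with the time column left out). It assumes the first row is the single frozen epoch that fine_tune runs by default before the eight unfrozen ones, which as far as I can tell is why epoch 0 shows up twice.

```python
# (epoch, train_loss, valid_loss) copied from the training output above.
# Assumption: the first printed row is the frozen warm-up epoch.
frozen = [(0, 3.394050359725952, 2.541146755218506)]
unfrozen = [
    (0, 1.87003755569458, 1.5329511165618896),
    (1, 1.567323088645935, 1.4149396419525146),
    (2, 1.3944206237792969, 1.1255743503570557),
    (3, 1.2387481927871704, 0.8764406442642212),
    (4, 1.1080732345581055, 0.8167174458503723),
    (5, 1.0044615268707275, 0.77195143699646),
    (6, 0.91986483335495, 0.7509599924087524),
    (7, 0.8545125722885132, 0.7430445551872253),
]

# Epoch with the lowest validation loss among the unfrozen epochs.
best_epoch, _, best_valid = min(unfrozen, key=lambda row: row[2])

# True if the validation loss went down at every single epoch.
still_improving = all(a[2] > b[2] for a, b in zip(unfrozen, unfrozen[1:]))

print(best_epoch, round(best_valid, 4), still_improving)  # 7 0.743 True
```

The validation loss drops at every epoch and is lowest at the last one, so a few more epochs might still help, although I haven't tried that here.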

with learner.no_bar():

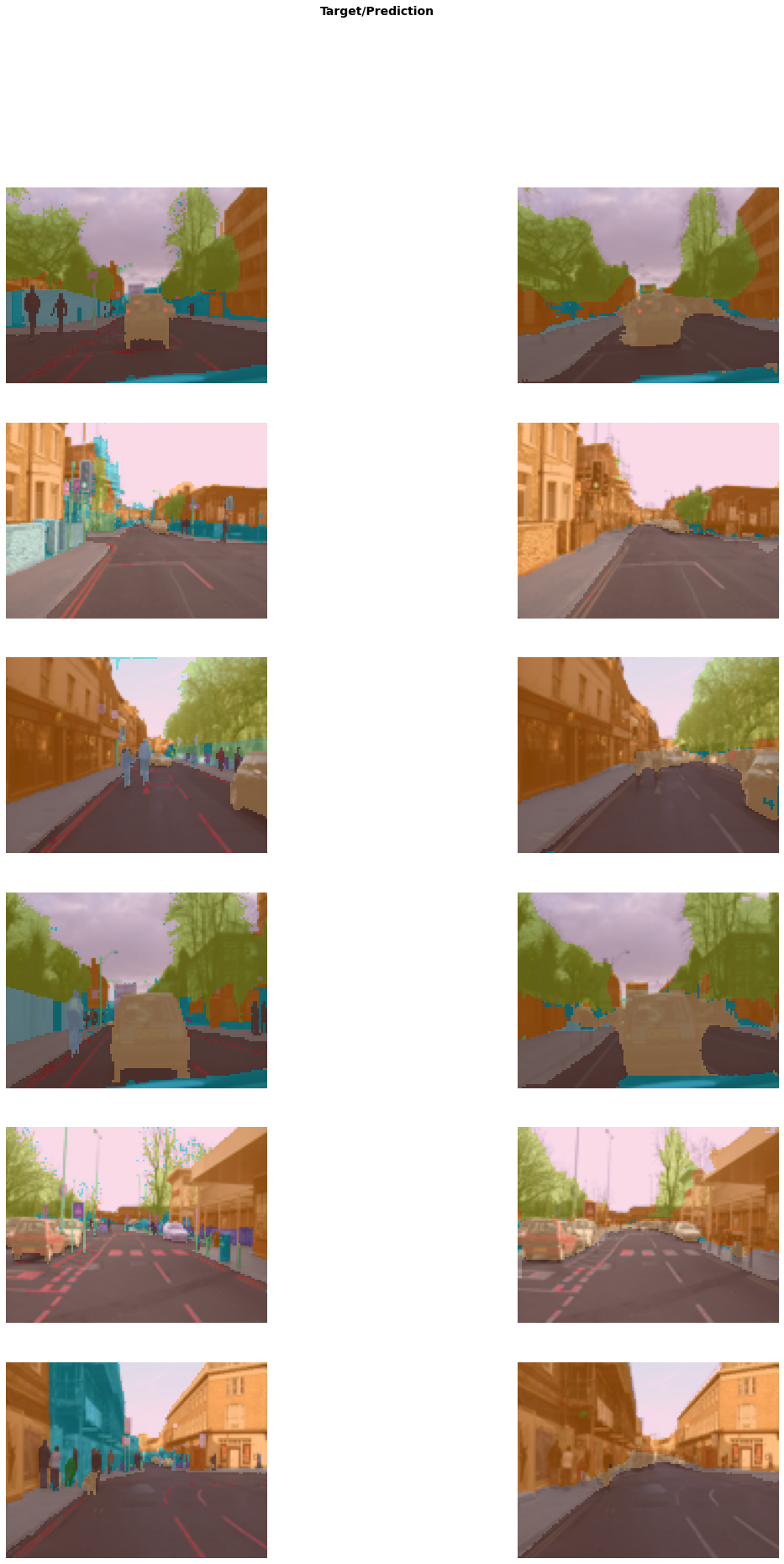

learner.show_results(max_n=6, figsize=(20, 30))

Note: The quickstart example ends with a plot of the images that had the greatest loss, but it doesn't work out of the box in this org-mode setup. I'll skip it for now, since I think getting it working will be a bit of a slog.

End

The top post for the series of quickstart posts is this one, and the next post will be on sentiment analysis.