Horses And Humans

Beginning

Imports

Python

from functools import partial

from pathlib import Path

import random

import zipfile

PyPi

from expects import (

be_true,

expect,

)

from holoviews.operation.datashader import datashade

from keras import backend

from tensorflow.keras.models import Model

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.preprocessing.image import (ImageDataGenerator,

img_to_array, load_img)

import cv2

import holoviews

import matplotlib.pyplot as pyplot

import numpy

import requests

import tensorflow

My Stuff

from graeae import EmbedHoloviews

Embed = partial(EmbedHoloviews,

folder_path="../../files/posts/keras/horses-and-humans/")

holoviews.extension("bokeh")

Middle

The Data Set

OUTPUT = "~/data/datasets/images/horse-or-human/training/"
output_path = Path(OUTPUT).expanduser()

if not output_path.is_dir():
    print("Downloading the images")
    URL = ("https://storage.googleapis.com/"
           "laurencemoroney-blog.appspot.com/"
           "horse-or-human.zip")
    response = requests.get(URL)
    ZIP = "/tmp/horse-or-human.zip"
    with open(ZIP, "wb") as writer:
        writer.write(response.content)
    print(f"Downloaded zip to {ZIP}")
    with zipfile.ZipFile(ZIP, "r") as unzipper:
        unzipper.extractall(output_path)
else:
    print("Files exist, not downloading")

expect(output_path.is_dir()).to(be_true)
for thing in output_path.iterdir():
    print(thing)
data_path = output_path

Files exist, not downloading
/home/athena/data/datasets/images/horse-or-human/training/horses
/home/athena/data/datasets/images/horse-or-human/training/humans

The convention for training computer vision models appears to be that you use the folder names to label the images within them. In this case we have horses and humans.

Here's what some of the files themselves are named.

horses_path = output_path/"horses"
humans_path = output_path/"humans"

for path in (horses_path, humans_path):
    print(path.name)
    for index, image in enumerate(path.iterdir()):
        print(f"File: {image.name}")
        if index == 9:
            break
    print()

horses
File: horse48-5.png
File: horse45-8.png
File: horse13-5.png
File: horse34-4.png
File: horse46-5.png
File: horse02-3.png
File: horse06-3.png
File: horse32-1.png
File: horse25-3.png
File: horse04-3.png

humans
File: human01-07.png
File: human02-11.png
File: human13-07.png
File: human10-10.png
File: human15-06.png
File: human05-15.png
File: human06-18.png
File: human16-28.png
File: human02-24.png
File: human10-05.png

So, in this case you can tell what they are from the file-names as well. How many images are there?

horse_files = list(horses_path.iterdir())

human_files = list(humans_path.iterdir())

print(f"Horse Images: {len(horse_files)}")

print(f"Human Images: {len(human_files)}")

print(f"Image Shape: {pyplot.imread(str(horse_files[0])).shape}")

Horse Images: 500
Human Images: 527
Image Shape: (300, 300, 4)

This is sort of a small data-set, and it's odd that there are more humans than horses. Let's see what some of them look like. I'm assuming all the files have the same shape. In this case it looks like they are 300 x 300 with four channels (RGB and alpha?).

height = width = 300
count = 4
columns = 2

# Apply the size options to the datashaded plot, the same way for both classes
horse_plots = [datashade(holoviews.RGB.load_image(str(horse))).opts(
    height=height,
    width=width,
)
               for horse in horse_files[:count]]

human_plots = [datashade(holoviews.RGB.load_image(str(human))).opts(
    height=height,
    width=width,
)
               for human in human_files[:count]]

plot = holoviews.Layout(horse_plots + human_plots).cols(columns).opts(
    title="Horses and Humans")

Embed(plot=plot, file_name="horses_and_humans",
      height_in_pixels=900)()

As you can see, the people in the images aren't really humans (and it may not be so obvious, but the horses aren't really horses either). These are computer-generated images.

A Model

As before, the model will be a sequential model with convolutional layers. In this case we'll have five convolutional layers before passing the convolved images to the fully-connected layer. Although my inspection showed that the images have 4 channels, the model in the example only uses 3.

Also, since this is a binary classification (each image is either a human or a horse), the output layer uses a sigmoid activation function instead of softmax.
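To make the role of the sigmoid concrete, here is a minimal sketch in plain Python (not the Keras implementation). The `sigmoid` and `classify` helpers and the 0.5 threshold are assumptions chosen for illustration: the single output unit produces a number between 0 and 1, and a threshold turns it into one of the two classes.

```python
import math


def sigmoid(z):
    """Squash a real-valued score into the open interval (0, 1)."""
    return 1 / (1 + math.exp(-z))


def classify(z, threshold=0.5):
    """Return 1 when the sigmoid output exceeds the threshold, otherwise 0."""
    return int(sigmoid(z) > threshold)


# Strongly negative scores map near 0, strongly positive scores map near 1
for score in (-4.0, 0.0, 4.0):
    print(f"score={score:+.1f} sigmoid={sigmoid(score):.3f} class={classify(score)}")
```

With only two classes, one sigmoid output is enough, because the probability of the other class is just one minus the output.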

model = tensorflow.keras.models.Sequential()

The Input Layer

The input layer is a convolutional layer with 16 filters and a 3 x 3 kernel (all the convolutions use the same kernel shape). Each convolutional layer is followed by a max-pooling layer that halves the height and width of its output.

model.add(tensorflow.keras.layers.Conv2D(16, (3,3),

activation='relu',

input_shape=(300, 300, 3)))

model.add(tensorflow.keras.layers.MaxPooling2D(2, 2))

The Rest Of The Convolutional Layers

The remaining convolutional layers increase the depth by doubling until they reach 64.

# The second convolution

model.add(tensorflow.keras.layers.Conv2D(32, (3,3),

activation='relu'))

model.add(tensorflow.keras.layers.MaxPooling2D(2,2))

# The third convolution

model.add(tensorflow.keras.layers.Conv2D(64, (3,3),

activation='relu'))

model.add(tensorflow.keras.layers.MaxPooling2D(2,2))

# The fourth convolution

model.add(tensorflow.keras.layers.Conv2D(64, (3,3),

activation='relu'))

model.add(tensorflow.keras.layers.MaxPooling2D(2,2))

# The fifth convolution

model.add(tensorflow.keras.layers.Conv2D(64, (3,3),

activation='relu'))

model.add(tensorflow.keras.layers.MaxPooling2D(2,2))

The Fully Connected Layer

Once we have the convolved version of our image, we feed it into the fully-connected layer to get a classification.

First we flatten the input into a vector.

model.add(tensorflow.keras.layers.Flatten())

Then we feed the input into a 512 neuron fully-connected layer.

model.add(tensorflow.keras.layers.Dense(512, activation='relu'))

And now we get to our output layer which makes the prediction of whether the image is a human or a horse.

model.add(tensorflow.keras.layers.Dense(1, activation='sigmoid'))

One thing that's not so obvious is what the output means: is it predicting that the image is a human or that it's a horse? Nothing in the model itself indicates which is which. Presumably, like the case with MNIST and Fashion MNIST, the ordering of the labels decides it, and here that would be the alphabetical ordering of the folders (horses first, then humans).
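As far as I know, Keras's `flow_from_directory` assigns class indices by sorting the sub-directory names, and the iterator it returns exposes the mapping as a `class_indices` attribute. The sketch below only mimics that ordering in plain Python; the `class_indices` function here is a hypothetical stand-in, not the Keras code.

```python
def class_indices(folder_names):
    """Map folder names to integer labels in sorted (alphanumeric) order."""
    return {name: index for index, name in enumerate(sorted(folder_names))}


# The two folders found in the training directory
indices = class_indices(["humans", "horses"])
print(indices)
```

Under that assumption horses are labeled 0 and humans 1, so a sigmoid output close to 1 would mean "human".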

A Summary of the Model.

model.summary()

Model: "sequential_2"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_10 (Conv2D)           (None, 298, 298, 16)      448
_________________________________________________________________
max_pooling2d_10 (MaxPooling (None, 149, 149, 16)      0
_________________________________________________________________
conv2d_11 (Conv2D)           (None, 147, 147, 32)      4640
_________________________________________________________________
max_pooling2d_11 (MaxPooling (None, 73, 73, 32)        0
_________________________________________________________________
conv2d_12 (Conv2D)           (None, 71, 71, 64)        18496
_________________________________________________________________
max_pooling2d_12 (MaxPooling (None, 35, 35, 64)        0
_________________________________________________________________
conv2d_13 (Conv2D)           (None, 33, 33, 64)        36928
_________________________________________________________________
max_pooling2d_13 (MaxPooling (None, 16, 16, 64)        0
_________________________________________________________________
conv2d_14 (Conv2D)           (None, 14, 14, 64)        36928
_________________________________________________________________
max_pooling2d_14 (MaxPooling (None, 7, 7, 64)          0
_________________________________________________________________
flatten_2 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_5 (Dense)              (None, 512)               1606144
_________________________________________________________________
dense_6 (Dense)              (None, 1)                 513
=================================================================
Total params: 1,704,097
Trainable params: 1,704,097
Non-trainable params: 0
_________________________________________________________________
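The parameter counts in the summary can be reproduced by hand. This is a small sketch with helper names of my own (`conv2d_params`, `dense_params`), assuming each convolutional filter has one weight per kernel cell per input channel plus a bias, and each dense unit has one weight per input plus a bias.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel x kernel x in_channels) plus one bias, per filter."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(inputs, units):
    """One weight per input plus one bias, per unit."""
    return (inputs + 1) * units


conv_filters = [16, 32, 64, 64, 64]  # filters in the five convolutional layers
channels = 3                         # RGB input
counts = []
for filters in conv_filters:
    counts.append(conv2d_params(3, channels, filters))
    channels = filters               # the next layer sees this many channels

counts.append(dense_params(7 * 7 * 64, 512))  # flattened input to the 512-unit layer
counts.append(dense_params(512, 1))           # output unit

print(counts)
print(f"Total: {sum(counts):,}")
```

Nearly all of the parameters sit in the first dense layer; the max-pooling and flatten layers contribute none.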

That's a lot of parameters… It's interesting to note that by the time the data gets fed into the Flatten layer it has been reduced to a 7 x 7 x 64 matrix.

print(f"300 x 300 x 3 = {300 * 300 * 3:,}")

300 x 300 x 3 = 270,000

So the original input has been reduced from 270,000 values (300 x 300 pixels with three channels each) to 3,136 by the time it gets to the fully-connected layer.
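The shrinking sizes can be traced layer by layer. This sketch assumes what the summary's output shapes suggest: a 3 x 3 convolution without padding trims two pixels from each side length, and 2 x 2 max-pooling halves it, rounding down. The function names are only for illustration.

```python
def conv_valid(size, kernel=3):
    """Side length after an unpadded convolution."""
    return size - kernel + 1


def max_pool(size, pool=2):
    """Side length after max-pooling (odd sizes round down)."""
    return size // pool


size = 300
sizes = [size]
for _ in range(5):  # five convolution + pooling pairs
    size = conv_valid(size)
    sizes.append(size)
    size = max_pool(size)
    sizes.append(size)

print(sizes)
print(f"Flattened length: {size * size * 64:,}")
```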

Compile the Model

The optimizer we're going to use is the RMSprop optimizer, which, unlike SGD, tunes the learning rate as it progresses. Also, since we only have two categories, the loss function will be binary crossentropy. Our metric will once again be accuracy.
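For reference, binary crossentropy averages -[y log(p) + (1 - y) log(1 - p)] over the samples, where y is the 0/1 label and p is the predicted probability. Below is a minimal plain-Python sketch, not the Keras implementation; the `epsilon` clipping value and the example predictions are assumptions made up for illustration.

```python
import math


def binary_crossentropy(y_true, y_pred, epsilon=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over the samples."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clip so that log(0) never happens
        p = min(max(p, epsilon), 1 - epsilon)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


labels = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]  # mostly right
unsure = [0.5, 0.5, 0.5, 0.5]     # always on the fence
wrong = [0.1, 0.9, 0.2, 0.8]      # confidently wrong

for name, predictions in (("confident", confident),
                          ("unsure", unsure),
                          ("wrong", wrong)):
    print(f"{name}: {binary_crossentropy(labels, predictions):.4f}")
```

A model that always answers 0.5 scores ln 2 (about 0.693), which is a handy baseline when reading the loss in a training log.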

model.compile(loss='binary_crossentropy',

optimizer=RMSprop(learning_rate=0.001),

metrics=['acc'])

Transform the Data

We're going to use the ImageDataGenerator to preprocess the images so that they are normalized and batched. This class also supports transforming the images to create more variety in the training set.

training_data_generator = ImageDataGenerator(rescale=1/255)

The flow_from_directory method takes a path to the directory of images and generates batches of augmented data.

training_batches = training_data_generator.flow_from_directory(

data_path,

target_size=(300, 300),

batch_size=128,

class_mode='binary')

Found 1027 images belonging to 2 classes.
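With 1,027 images and a batch size of 128, it's worth checking how many batches one pass over the data takes, since the training call uses `steps_per_epoch=8`. This is just the arithmetic, with variable names of my own choosing.

```python
import math

images = 1027        # from the "Found 1027 images" message
batch_size = 128     # the batch_size passed to flow_from_directory
steps_per_epoch = 8  # the value used in the training call

batches_per_pass = math.ceil(images / batch_size)
last_batch = images - (batches_per_pass - 1) * batch_size
seen_per_epoch = steps_per_epoch * batch_size

print(f"Batches for a full pass: {batches_per_pass}")
print(f"Images in the last batch: {last_batch}")
print(f"Most images seen in {steps_per_epoch} steps: {seen_per_epoch}")
```

So eight steps cover nearly, but not quite, the whole data set; the few left-over images would form a ninth, much smaller batch.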

Training the Model

history = model.fit(

training_batches,

steps_per_epoch=8,

epochs=15,

verbose=2)

Epoch 1/15
8/8 - 5s - loss: 0.7879 - acc: 0.5732
Epoch 2/15
8/8 - 4s - loss: 0.7427 - acc: 0.6615
Epoch 3/15
8/8 - 4s - loss: 0.8984 - acc: 0.6897
Epoch 4/15
8/8 - 4s - loss: 0.3973 - acc: 0.8165
Epoch 5/15
8/8 - 4s - loss: 0.2011 - acc: 0.9188
Epoch 6/15
8/8 - 5s - loss: 1.2254 - acc: 0.7373
Epoch 7/15
8/8 - 4s - loss: 0.2228 - acc: 0.8902
Epoch 8/15
8/8 - 4s - loss: 0.1798 - acc: 0.9333
Epoch 9/15
8/8 - 5s - loss: 0.2079 - acc: 0.9287
Epoch 10/15
8/8 - 4s - loss: 0.3128 - acc: 0.8999
Epoch 11/15
8/8 - 4s - loss: 0.0782 - acc: 0.9722
Epoch 12/15
8/8 - 4s - loss: 0.0683 - acc: 0.9711
Epoch 13/15
8/8 - 4s - loss: 0.1263 - acc: 0.9789
Epoch 14/15
8/8 - 5s - loss: 0.6828 - acc: 0.8574
Epoch 15/15
8/8 - 4s - loss: 0.0453 - acc: 0.9855

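By the last epoch the training loss is very low (0.0453) and the training accuracy is about 98.6%, although both bounce around from epoch to epoch (epoch 14 drops back to about 86% accuracy).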

Looking At Some Predictions

test_path = Path("~/test_images/").expanduser()

height = width = 400

plots = [datashade(holoviews.RGB.load_image(str(path))).opts(

title=f"{path.name}",

height=height,

width=width

) for path in test_path.iterdir()]

plot = holoviews.Layout(plots).cols(2).opts(title="Test Images")

Embed(plot=plot, file_name="test_images", height_in_pixels=900)()

Horse

target_size = (300, 300)

images = (("horse.jpg", "Horse"),
          ("centaur.jpg", "Centaur"),
          ("tomb_figure.jpg", "Statue of a Man Riding a Horse"),
          ("rembrandt.jpg", "Woman"))

for filename, label in images:
    loaded = cv2.imread(str(test_path/filename))
    x = cv2.resize(loaded, target_size)
    x = numpy.reshape(x, (1, 300, 300, 3))
    prediction = model.predict(x)
    predicted = "human" if prediction[0] > 0.5 else "horse"
    print(f"The {label} is a {predicted}.")

The Horse is a horse.
The Centaur is a horse.
The Statue of a Man Riding a Horse is a human.
The Woman is a horse.

Strangely, the model predicted the woman was a horse.
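One thing that might contribute to this is a mismatch in preprocessing: the training generator rescales pixel values by 1/255, while the test images are read with `cv2.imread`, which returns values in the 0 to 255 range with the channels in blue-green-red order. The sketch below shows the conversion on a tiny hand-made "image" built from nested lists. The helper names are hypothetical, and I haven't re-run the predictions with this change, so whether it changes the results is an open question.

```python
def preprocess_pixel(bgr_pixel):
    """Convert one (B, G, R) pixel in 0..255 to (R, G, B) in 0..1."""
    blue, green, red = bgr_pixel
    return tuple(channel / 255 for channel in (red, green, blue))


def preprocess_image(bgr_image):
    """Apply the pixel conversion to every pixel in a nested-list image."""
    return [[preprocess_pixel(pixel) for pixel in row] for row in bgr_image]


# One row holding a pure-blue and a pure-red pixel, in BGR order
image = [[(255, 0, 0), (0, 0, 255)]]
converted = preprocess_image(image)
print(converted)
```

With the arrays that OpenCV returns, the equivalent would be something like `cv2.cvtColor(loaded, cv2.COLOR_BGR2RGB) / 255.0` before reshaping.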

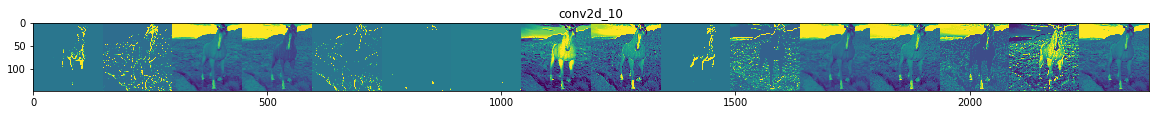

Visualizing The Layer Outputs

outputs = [layer.output for layer in model.layers[1:]]

new_model = Model(inputs=model.input, outputs=outputs)

image_path = random.choice(horse_files + human_files)

image = load_img(image_path, target_size=target_size)

x = img_to_array(image)

x = x.reshape((1,) + x.shape)

x /= 255.

predictions = new_model.predict(x)

layer_names = [layer.name for layer in model.layers[1:]]

for layer_name, feature_map in zip(layer_names, predictions):
    if len(feature_map.shape) == 4:
        # Just do this for the conv / maxpool layers, not the fully-connected layers
        n_features = feature_map.shape[-1]  # number of features in feature map
        # The feature map has shape (1, size, size, n_features)
        size = feature_map.shape[1]
        # We will tile our images in this matrix
        display_grid = numpy.zeros((size, size * n_features))
        for i in range(n_features):
            # Postprocess the feature to make it visually palatable
            x = feature_map[0, :, :, i]
            x -= x.mean()
            x /= x.std()
            x *= 64
            x += 128
            x = numpy.clip(x, 0, 255).astype('uint8')
            # We'll tile each filter into this big horizontal grid
            display_grid[:, i * size : (i + 1) * size] = x
        # Display the grid
        scale = 20. / n_features
        pyplot.figure(figsize=(scale * n_features, scale))
        pyplot.title(layer_name)
        pyplot.grid(False)
        pyplot.imshow(display_grid, aspect='auto', cmap='viridis')

Some of the images seem blank (or nearly so). It's hard to really interpret what's going on here.
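One possible reason for the blank tiles is a feature map whose values are all the same (for instance, all zeros after the ReLU): its standard deviation is zero, so the standardization step divides by zero. Here is a plain-Python sketch of the same post-processing on a flat list of values, with a guard for that case. The function name and the choice to show a constant map as mid-gray are my own assumptions, not part of the original notebook.

```python
import statistics


def to_display_values(values):
    """Standardize, then map to 0..255 (mean at 128, one std = 64 levels)."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population std, like numpy's default
    if std == 0:
        # A constant map has no contrast: show it as uniform mid-gray
        return [128 for _ in values]
    scaled = ((value - mean) / std * 64 + 128 for value in values)
    # Clip to the displayable range and truncate to integers
    return [int(min(max(value, 0), 255)) for value in scaled]


print(to_display_values([0.0, 1.0, 2.0, 3.0]))
print(to_display_values([0.0, 0.0, 0.0, 0.0]))
```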

End

Source

This is a walk-through of the Course 1 - Part 8 - Lesson 2 - Notebook.ipynb on GitHub.