Softmax

Table of Contents

What is the Softmax Function?

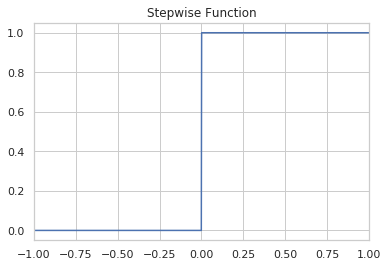

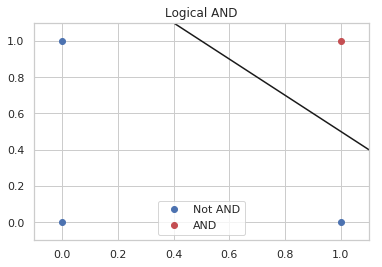

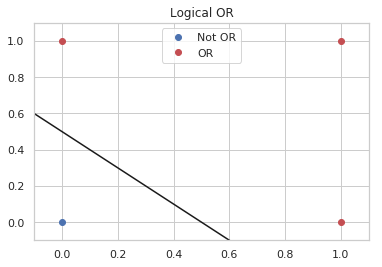

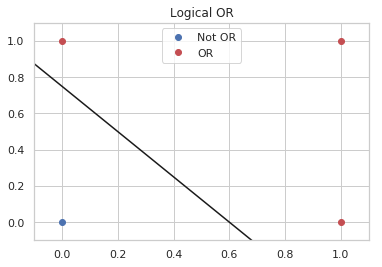

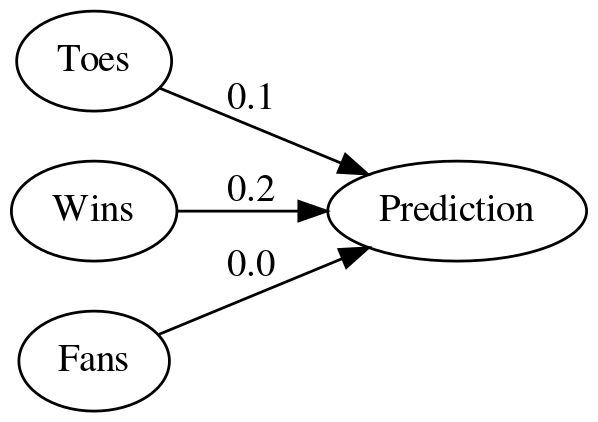

With the stepwise and logistic function you are limited to binary classifications. The softmax function is a generalization of the logistic (sigmoid) function that lets you choose between multiple categories.

A classification problem

What animal did you see?

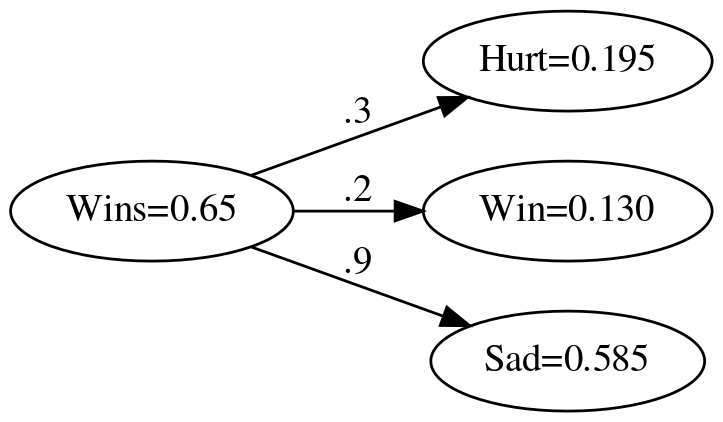

We have three animals and the probabilities that the animal you saw are the following:

- P(duck) = 0.67

- P(beaver) = 0.24

- P(walrus) = 0.09

We count the occurrence of animals we see and get these counts.

| Animal | Count |

|---|---|

| Duck | 2 |

| Beaver | 1 |

| Walrus | 0 |

So, how do you convert these scores into probabilities?

Standardize

One way to convert the counts to probabilities is by dividing each count by the total.

\[ P = \frac{count}{\textit{total count}} \]

The problem with this is we might not be dealing with counts and so we have to deal with negative numbers in which case the sum of the values (total count in this case) could equal zero. We need to use a function that will turn any value we have (even if it isn't a count) into a positive number.

Which function would turn every number into a positive number?

[ ]sin[ ]cos[ ]log[X]exp

The Exponential

It turns out that if you take the numbers and use them as the power of e, your values will always be positive, so to normalize our values, instead of taking the count divided by the sum of the counts, we would take the exponential of our count divided by the sum of the exponentials of all the counts.

\[ P(duck) = \frac{e^2}{e^2 + e^1 + e^0}\\ = 0.67 \]

This is the softmax function.

Implementation

Imports

import numpy

Write a function that takes as input a list of numbers, and returns the list of values given by the softmax function. This uses numpy.exp to approximate e.

def softmax(L):

"""calculates the softmax probmabilities

Args:

L: List of values

Returns:

softmax: the softmax probabilities for the values

"""

values = numpy.exp(L)

return values/values.sum()

values = [2, 1, 0]

expected_values = [0.67, 0.24, 0.09]

actual = softmax(values)

tolerance = 0.1**2

expected_actual = zip(expected_values, actual)

for index, (expected, actual) in enumerate(expected_actual):

print("{:.2f}".format(actual))

assert abs(actual - expected) < tolerance,\

"Expected: {} Actual: {}".format(expected, actual)

0.67 0.24 0.09