Multi-Layer Perceptrons

This is much like the previous gradient-descent post, but with more layers: a hidden layer now sits between the inputs and the outputs.

Set Up

Imports

From PyPI

from graphviz import Graph

import numpy

The Activation Function

def sigmoid(x):
    """
    Calculate the logistic sigmoid 1 / (1 + e^-x).

    Works element-wise when x is a numpy array.
    """
    return 1/(1 + numpy.exp(-x))
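A quick numerical check of the sigmoid. This sketch repeats the definition so it runs on its own: the function maps 0 to exactly 0.5, squashes large-magnitude inputs toward 0 or 1, and satisfies sigmoid(-x) = 1 - sigmoid(x). Because it is built on `numpy.exp`, it also works element-wise on arrays, which the forward pass relies on.

```python
import numpy

def sigmoid(x):
    """Calculate the logistic sigmoid 1 / (1 + e^-x), element-wise for arrays."""
    return 1 / (1 + numpy.exp(-x))

values = numpy.array([-10.0, -1.0, 0.0, 1.0, 10.0])
outputs = sigmoid(values)

# every output lies strictly between 0 and 1
print(outputs)

# sigmoid(0) is exactly one half
print(sigmoid(0.0))  # → 0.5

# symmetry: sigmoid(-x) equals 1 - sigmoid(x), up to floating-point error
print(numpy.allclose(sigmoid(-values), 1 - sigmoid(values)))  # → True
```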

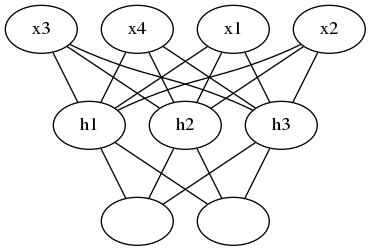

Defining Our Network

These variables set the number of nodes in each layer of our network.

N_input = 4

N_hidden = 3

N_output = 2
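The layer sizes fix the shapes of the two weight matrices, since every input connects to every hidden node and every hidden node connects to every output. A small sketch counting the weights (this network has no bias terms, so the weights are its only parameters):

```python
N_input = 4
N_hidden = 3
N_output = 2

# one weight per connection between consecutive, fully connected layers
input_to_hidden_shape = (N_input, N_hidden)
hidden_to_output_shape = (N_hidden, N_output)

n_weights = N_input * N_hidden + N_hidden * N_output
print(input_to_hidden_shape, hidden_to_output_shape, n_weights)  # → (4, 3) (3, 2) 18
```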

With these sizes the network looks like this (drawn with graphviz; the two unlabeled nodes on the right are the outputs).

graph = Graph(format="png")

# input layer

graph.node("a", "x1")

graph.node("b", "x2")

graph.node("c", "x3")

graph.node("d", "x4")

# hidden layer

graph.node("e", "h1")

graph.node("f", "h2")

graph.node("g", "h3")

# output layer

graph.node("h", "")

graph.node("i", "")

graph.edges(["ae", "af", "ag", "be", "bf", "bg", "ce", "cf", "cg", "de", "df", "dg"])

graph.edges(["eh", "ei", "fh", "fi", "gh", "gi"])

graph.render("graphs/network.dot")

graph
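Writing the edge lists by hand gets tedious as the layers grow. A sketch, assuming the same single-letter node names used above, builds the identical two lists with `itertools.product`. The two-letter strings work with `graph.edges` because each one unpacks into a (tail, head) pair of node names.

```python
from itertools import product

input_nodes = ["a", "b", "c", "d"]
hidden_nodes = ["e", "f", "g"]
output_nodes = ["h", "i"]

# every node in one layer connects to every node in the next layer
input_to_hidden_edges = [src + dst for src, dst in product(input_nodes, hidden_nodes)]
hidden_to_output_edges = [src + dst for src, dst in product(hidden_nodes, output_nodes)]

print(input_to_hidden_edges)
print(hidden_to_output_edges)
```

The results could be passed to `graph.edges(...)` in place of the literal lists.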

Next, set the random seed so that the fake data and the initial weights come out the same on every run.

numpy.random.seed(42)
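A short sketch of what seeding buys us: reseeding with the same value replays the same sequence of draws. NumPy also offers the newer `numpy.random.default_rng(seed)` Generator API, which is seeded per object rather than globally; it uses a different algorithm than the legacy `numpy.random.seed`, so switching to it would change the numbers in this post.

```python
import numpy

numpy.random.seed(42)
first = numpy.random.randn(4)

numpy.random.seed(42)
second = numpy.random.randn(4)

# same seed, same sequence of draws
print(numpy.array_equal(first, second))  # → True

# the Generator API: each generator object carries its own state
rng_a = numpy.random.default_rng(42)
rng_b = numpy.random.default_rng(42)
third = rng_a.standard_normal(4)
fourth = rng_b.standard_normal(4)
print(numpy.array_equal(third, fourth))  # → True
```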

Some fake data to feed through the network: a single sample with one value per input node.

X = numpy.random.randn(4)

print(X.shape)

(4,)

Now initialize the weights, drawing each one from a normal distribution with mean 0 and standard deviation 0.1.

weights_input_to_hidden = numpy.random.normal(0, scale=0.1, size=(N_input, N_hidden))

weights_hidden_to_output = numpy.random.normal(0, scale=0.1, size=(N_hidden, N_output))

print(weights_input_to_hidden.shape)

print(weights_hidden_to_output.shape)

(4, 3)
(3, 2)
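These shapes are what make the matrix products line up: a length-4 input dotted with a (4, 3) matrix gives 3 hidden values, and those dotted with a (3, 2) matrix give 2 outputs. The same weights also handle several inputs stacked as rows. A sketch with a hypothetical batch of five random samples (its own random values, not the post's):

```python
import numpy

N_input, N_hidden, N_output = 4, 3, 2

rng = numpy.random.default_rng(0)
weights_input_to_hidden = rng.normal(0, scale=0.1, size=(N_input, N_hidden))
weights_hidden_to_output = rng.normal(0, scale=0.1, size=(N_hidden, N_output))

single = rng.standard_normal(N_input)        # shape (4,), like X above
batch = rng.standard_normal((5, N_input))    # hypothetical batch: 5 rows of 4 features

single_hidden = single.dot(weights_input_to_hidden)
batch_hidden = batch.dot(weights_input_to_hidden)
batch_output = batch_hidden.dot(weights_hidden_to_output)

print(single_hidden.shape)  # → (3,)
print(batch_hidden.shape)   # → (5, 3)
print(batch_output.shape)   # → (5, 2)

# each row of the batch result equals the single-sample computation for that row
print(numpy.allclose(batch_hidden[0], batch[0].dot(weights_input_to_hidden)))  # → True
```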

Forward Pass

This is one forward pass through our network.
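Written as equations, the forward pass is shown below. The symbols are shorthand introduced here: $x$ is the input `X`, $W^{(1)}$ is `weights_input_to_hidden`, $W^{(2)}$ is `weights_hidden_to_output`, $h$ is `hidden_layer_out`, $\hat{y}$ is `output_layer_out`, and $\sigma$ is the sigmoid applied element-wise.

```latex
\begin{aligned}
h       &= \sigma\left(x\,W^{(1)}\right),       & h_j       &= \sigma\Big(\sum_{i=1}^{4} x_i\,W^{(1)}_{ij}\Big), \quad j = 1,2,3,\\
\hat{y} &= \sigma\left(h\,W^{(2)}\right), & \hat{y}_k &= \sigma\Big(\sum_{j=1}^{3} h_j\,W^{(2)}_{jk}\Big), \quad k = 1,2.
\end{aligned}
```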

hidden_layer_in = X.dot(weights_input_to_hidden)

hidden_layer_out = sigmoid(hidden_layer_in)

print('Hidden-layer Output:')

print(hidden_layer_out)

Hidden-layer Output:
[0.5609517 0.4810582 0.44218495]
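To see what `X.dot(weights_input_to_hidden)` computes, this sketch builds each hidden node's input as an explicit weighted sum over the four inputs and checks it against the vectorized version. It draws its own random values, so its numbers will differ from the output above.

```python
import numpy

def sigmoid(x):
    """Calculate the logistic sigmoid 1 / (1 + e^-x), element-wise for arrays."""
    return 1 / (1 + numpy.exp(-x))

rng = numpy.random.default_rng(1)
X = rng.standard_normal(4)
weights_input_to_hidden = rng.normal(0, scale=0.1, size=(4, 3))

# hidden node j receives the sum over inputs i of X[i] * w[i, j]
hidden_in_loop = numpy.zeros(3)
for j in range(3):
    for i in range(4):
        hidden_in_loop[j] += X[i] * weights_input_to_hidden[i, j]

hidden_in_vectorized = X.dot(weights_input_to_hidden)
print(numpy.allclose(hidden_in_loop, hidden_in_vectorized))  # → True

# applying the activation gives the hidden-layer output
print(sigmoid(hidden_in_loop))
```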

Now feed the hidden-layer output through the second set of weights to get the network's output.

output_layer_in = hidden_layer_out.dot(weights_hidden_to_output)

output_layer_out = sigmoid(output_layer_in)

print('Output-layer Output:')

print(output_layer_out)

Output-layer Output:
[0.49936449 0.46156347]
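The two steps can be collected into a single function. This is a minimal sketch; the name `forward_pass` is hypothetical and not part of the original post. It repeats the setup above and checks that the function matches the step-by-step computation.

```python
import numpy

def sigmoid(x):
    """Calculate the logistic sigmoid 1 / (1 + e^-x), element-wise for arrays."""
    return 1 / (1 + numpy.exp(-x))

def forward_pass(x, weights_input_to_hidden, weights_hidden_to_output):
    """Hypothetical helper: run one forward pass, return (hidden_out, output_out)."""
    hidden_layer_out = sigmoid(x.dot(weights_input_to_hidden))
    output_layer_out = sigmoid(hidden_layer_out.dot(weights_hidden_to_output))
    return hidden_layer_out, output_layer_out

N_input, N_hidden, N_output = 4, 3, 2
numpy.random.seed(42)
X = numpy.random.randn(N_input)
weights_input_to_hidden = numpy.random.normal(0, scale=0.1, size=(N_input, N_hidden))
weights_hidden_to_output = numpy.random.normal(0, scale=0.1, size=(N_hidden, N_output))

hidden, output = forward_pass(X, weights_input_to_hidden, weights_hidden_to_output)

# the same computation written out step by step, as in the post
step_hidden = sigmoid(X.dot(weights_input_to_hidden))
step_output = sigmoid(step_hidden.dot(weights_hidden_to_output))

print(numpy.allclose(output, step_output))  # → True
print(hidden.shape, output.shape)  # → (3,) (2,)
```

Because the function only uses `dot` and an element-wise activation, it also accepts a 2-D batch of inputs with one sample per row.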